We’ve all seen the magic online: someone scribbles a rough doodle, feeds it to an AI, and gets back a masterpiece. The promise is amazing, especially for hobbyists or artists who want to visualize ideas fast. But here’s the thing: when I tried it, the results were all over the place. I’d draw a cat on a fence, and the AI would give me a cat near a fence, or just a very “fence-like” cat. The AI didn’t seem to understand my sketch at all. This frustration sparked an experiment. I decided to take the exact same sketch and run it through the top sketch-to-art tools to see which one could actually follow instructions.

I’m Mahnoor Farooq, and I’ve been exploring and writing about these creative AI tools for years. My main drive is curiosity. I love digging into how these tools “think” and finding practical ways to use them in a real creative process. I’m not a fan of exaggerated hype; I’d rather run the tests myself and share what I find, good or bad. This experiment isn’t about finding the “best” tool, but about understanding which tool is right for which job. For me, the real magic is sharing that knowledge so others can skip the frustration and get straight to creating.

Setting Up the Experiment: The Sketch and the Contenders

To make this a fair test, I needed a consistent sketch and a clear prompt. My goal was to see how well the AI could interpret composition, not just style.

The Test Subject: My Simple Drawing

I didn’t want to create a complex masterpiece. The point was to test a simple, clear concept. So, I drew a basic line drawing.

The Sketch: A simple, cartoonish cat with a long tail, sitting on a crescent moon. The cat is holding a small, five-pointed star in one of its front paws.

This sketch is perfect for a test because it has three distinct objects (cat, moon, star) with clear relationships. The cat isn’t just near the moon; it’s on it. The cat isn’t just with the star; it’s holding it. This tests the AI’s grasp of spatial relationships and composition.

The AI Tools on Trial

I chose three popular and powerful tools, all known for their image-generation skills but with very different approaches:

- Midjourney: Famous for its stunning, artistic, and often painterly results. It’s known more for its text-to-image power, but its image-prompting feature is what I’m testing.

- Stable Diffusion (with ControlNet): This is the “technical” option. Stable Diffusion on its own is a powerful model, but the ControlNet add-on is specifically designed to “control” the generation by locking it to a source image, like a sketch.

- DALL-E 3 (via ChatGPT): Known for its incredible ability to understand natural language prompts and its tight integration with ChatGPT, which allows for conversational edits.

The Control Prompt: Keeping Things Fair

To give every AI the same starting point, I used the exact same text prompt to accompany my sketch:

“A simple digital art drawing of a cat sitting on a crescent moon, holding a star.”

This prompt clearly describes the sketch, reinforcing the visual information. The experiment is simple: upload the sketch, use this prompt, and see what happens.

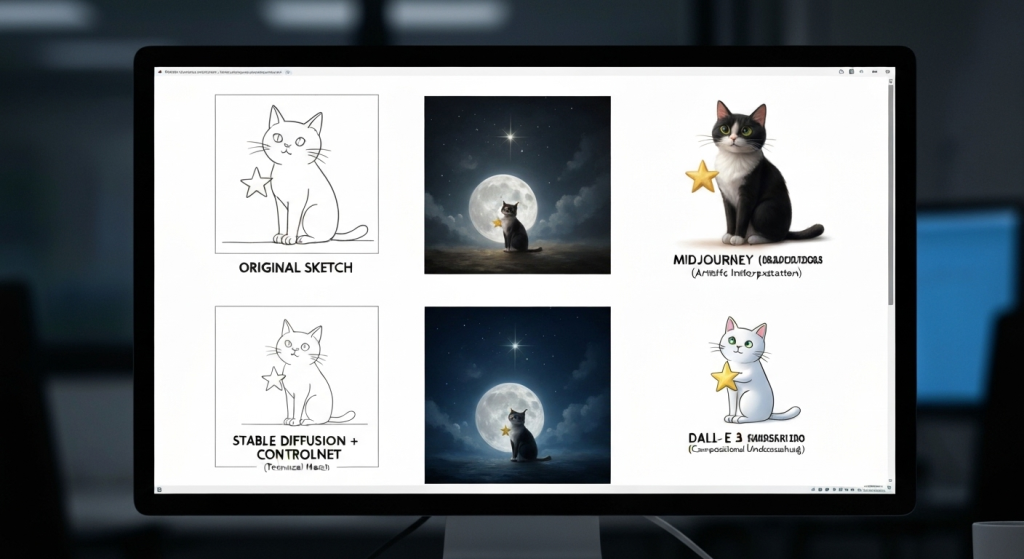

The Results: A Side-by-Side Breakdown

This is where things got really interesting. Each tool gave me a wildly different result, revealing exactly what it was built for.

Tool 1: Midjourney’s Artistic Interpretation

How it works: In Midjourney (which I use on Discord), I uploaded my sketch to get a URL. Then, I typed /imagine, pasted the image URL, and added my text prompt. I also used the image weight parameter (--iw 2.0) to tell Midjourney to pay close attention to my sketch.
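If you find yourself retyping that command a lot, the pieces can be assembled with a few lines of Python. This is only a sketch of the text that goes after /imagine: the image URL comes first, then the prompt, then the parameters. The function name and the example URL are made up for illustration, and the allowed range for --iw depends on the Midjourney version you are on, so the check here only rejects negative values.

```python
from typing import Optional


def build_imagine_prompt(image_url: str, text: str,
                         image_weight: Optional[float] = None) -> str:
    """Assemble the text typed after /imagine: image URL, prompt, then parameters."""
    image_url = image_url.strip()
    if not image_url.startswith(("http://", "https://")):
        raise ValueError("expected a direct link to the uploaded sketch")
    parts = [image_url, text.strip()]
    if image_weight is not None:
        if image_weight < 0:
            raise ValueError("image weight cannot be negative")
        # :g drops a trailing ".0", so 2.0 is written as "2"
        parts.append(f"--iw {image_weight:g}")
    return " ".join(parts)


sketch_url = "https://example.com/cat-moon-sketch.png"  # hypothetical URL
prompt = build_imagine_prompt(
    sketch_url,
    "A simple digital art drawing of a cat sitting on a crescent moon, holding a star.",
    image_weight=2.0,
)
print(prompt)
```

Leaving image_weight as None omits the parameter entirely, which lets Midjourney fall back to its default weighting.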

The Result (The “After”): The images were, without a doubt, beautiful. They were artistic, moody, and had gorgeous lighting. However, Midjourney treated my sketch like a suggestion, not an order.

- In most results, the cat was beautiful, but it was sitting next to the moon.

- The star was often a sparkle in the sky, not something the cat was holding.

- The AI seemed to grab the keywords—cat, moon, star—and then generated its own, more “artistically balanced” composition. It completely ignored the “holding” and “sitting on” parts of my sketch.

My Take: Midjourney is an inspirer. It’s not the tool for faithfully recreating your drawing. It’s what you use when you have a vague idea (like “cat and moon”) and you want the AI to show you a beautiful, unexpected way to bring it to life. It failed my test of understanding the sketch’s composition, but it succeeded in creating a stunning piece of art.

Tool 2: Stable Diffusion + ControlNet (The Technician)

How it works: This one has more steps. I used a web interface for Stable Diffusion (like Automatic1111). I loaded a base model (a general art style), then I enabled the “ControlNet” module. I selected the “Scribble” pre-processor, which is perfect for hand-drawn sketches. I uploaded my sketch into the ControlNet slot and put my text prompt in the main prompt box.
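For readers who prefer code to a web interface, roughly the same steps can be sketched with the Hugging Face diffusers library. To be clear, this is not the workflow I used above; it is a swapped-in equivalent. The two model names are assumptions (any Stable Diffusion 1.5 base model and a matching scribble ControlNet should slot in), the function names are my own, and it assumes an NVIDIA GPU with torch, diffusers, and Pillow installed.

```python
def build_generation_kwargs(prompt: str, conditioning_scale: float = 1.0,
                            steps: int = 20) -> dict:
    """Collect the text-side settings; the sketch image is passed separately."""
    if conditioning_scale < 0:
        raise ValueError("conditioning_scale must be non-negative")
    return {
        "prompt": prompt,
        "num_inference_steps": steps,
        # How strongly the sketch constrains the result
        "controlnet_conditioning_scale": conditioning_scale,
    }


def sketch_to_art(sketch_path: str, prompt: str,
                  base_model: str = "stable-diffusion-v1-5/stable-diffusion-v1-5",  # assumed model id
                  controlnet_model: str = "lllyasviel/sd-controlnet-scribble"):  # assumed model id
    # Heavy imports live inside the function so this file loads without a GPU stack.
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline
    from PIL import Image, ImageOps

    # Scribble ControlNets expect white lines on black, so invert a pen-on-paper sketch.
    sketch = Image.open(sketch_path).convert("L")
    control_image = ImageOps.invert(sketch).convert("RGB").resize((512, 512))

    controlnet = ControlNetModel.from_pretrained(controlnet_model, torch_dtype=torch.float16)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_model, controlnet=controlnet, torch_dtype=torch.float16
    )
    pipe = pipe.to("cuda")  # assumes an NVIDIA GPU

    result = pipe(image=control_image, **build_generation_kwargs(prompt))
    return result.images[0]
```

Calling sketch_to_art("cat_moon.png", "A simple digital art drawing of a cat sitting on a crescent moon, holding a star.") would return a Pillow image you can save; the file name here is hypothetical.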

The Result (The “After”): The result was shocking in its accuracy.

- The cat was perfectly positioned on the moon, exactly where I drew it.

- The crescent shape was correct.

- The cat was holding the star in its paw.

- ControlNet essentially “locked” the composition, forcing the main Stable Diffusion model to “color in” my lines according to the text prompt.

The Catch: While the composition was 100% correct, the artistic style was only as good as my text prompt and the base model I chose. My first try looked a bit flat and “computery.” It took more tweaking of the text prompt (like adding “vibrant colors, glowing, fantasy art”) to get a result that felt as “alive” as Midjourney’s.

My Take: ControlNet is the technician. It does exactly what you tell it to do. It has the steepest learning curve, but it is the only tool that truly understood and respected my original drawing. This is the tool for artists and designers who have a specific vision and need the AI to follow it precisely.

Tool 3: DALL-E 3 (The Creative Partner)

How it works: This was the easiest. I use DALL-E 3 through my ChatGPT Plus subscription. I simply clicked the paperclip icon, uploaded my sketch, and typed: “Use this sketch as a reference and create: ‘A simple digital art drawing of a cat sitting on a crescent moon, holding a star.’”

The Result (The “After”): DALL-E 3 was the perfect middle ground.

- It clearly analyzed the sketch. In all four images it gave me, the cat was sitting on the moon.

- In three out of the four, the cat was holding the star. The fourth one had the star floating just above its paw, which was a minor miss.

- The style was playful, clean, and exactly matched the “simple digital art” prompt. It didn’t have the moody-art-school vibe of Midjourney, but it was much more polished than my first Stable Diffusion attempts.

My Take: DALL-E 3 is the creative partner. It’s smart enough to understand the intent of your sketch and your prompt. It respects your core composition but still adds its own creative flair to make it look good. For a hobbyist or someone who wants a reliable, easy-to-use tool, this was the clear winner for balancing accuracy and aesthetics.

Comparison Table: Which Tool Wins for What?

To make this simple, here’s a breakdown of how each tool stacked up in my experiment.

| Feature | Midjourney | Stable Diffusion (with ControlNet) | DALL-E 3 (via ChatGPT) |
| --- | --- | --- | --- |
| Sketch Accuracy | Low. Ignored composition for aesthetics. | Very High. Locked to the sketch lines perfectly. | High. Understood and respected the main composition. |
| Artistic Quality | Very High. Beautiful, moody results out-of-the-box. | Variable. Depends heavily on your prompt and model. | Good to High. Clean, polished, and follows the prompt style. |
| Ease of Use | Moderate. Requires Discord and prompt commands. | Low to Moderate. Has a steep learning curve and setup. | Very High. As easy as uploading a file in a chat. |
| Control & Customization | Low. You can adjust image weight, but that’s it. | Very High. Full control over every part of the process. | Moderate. You can “talk” to it to make changes. |
| Best For… | Inspiration and mood boarding. | Artists who need 1:1 reproduction of a sketch. | Hobbyists and quick, reliable visualization. |

Why Do AI Tools ‘Misunderstand’ Our Sketches?

This experiment showed me that these tools don’t “see” sketches like humans do. My years of working with these models have taught me that they are all about patterns, not genuine understanding.

Different Training, Different Skills

What this really breaks down to is how the AI was trained.

- Midjourney doesn’t publish the details of its training, but its output is clearly tuned toward making things look good. When it sees my sketch and prompt, it thinks: “I know what a beautiful picture of a ‘cat,’ ‘moon,’ and ‘star’ looks like, and it’s not this. I’ll make one of those instead.” The sketch is just a light suggestion.

- ControlNet is a separate network that sits alongside the main model. It was specifically trained on one job: take the lines (or poses, or depth) that a pre-processor pulls out of a source image and force the main AI to follow them. Its entire purpose is to remove the AI’s “creative freedom” and make it obey the source. For a deep dive, you can check out the original research paper on ControlNet (warning: it’s very technical, but shows the computer science behind it).

- DALL-E 3 is an image model, not a language model, but in ChatGPT it works hand in hand with one. As far as I can tell, ChatGPT looks at the sketch and my prompt, logically pieces them together, and hands DALL-E 3 a detailed description to draw from. That gives the pair a much stronger grasp of language and relationships. When I say “cat on moon,” it handles that spatial relationship better than Midjourney does.

The Power of the Prompt

I also found that the text prompt is almost always more powerful than the sketch. If I only uploaded my sketch with no text, the results from all tools were chaotic and strange. The text prompt “A cat sitting on a crescent moon” is what anchors the idea. The sketch just guides where those ideas should go.

My Tips for Getting AI to Respect Your Drawing

After all this testing, I’ve developed a clear set of rules for getting better results. If you’re a digital artist or hobbyist, these tips will save you a lot of time.

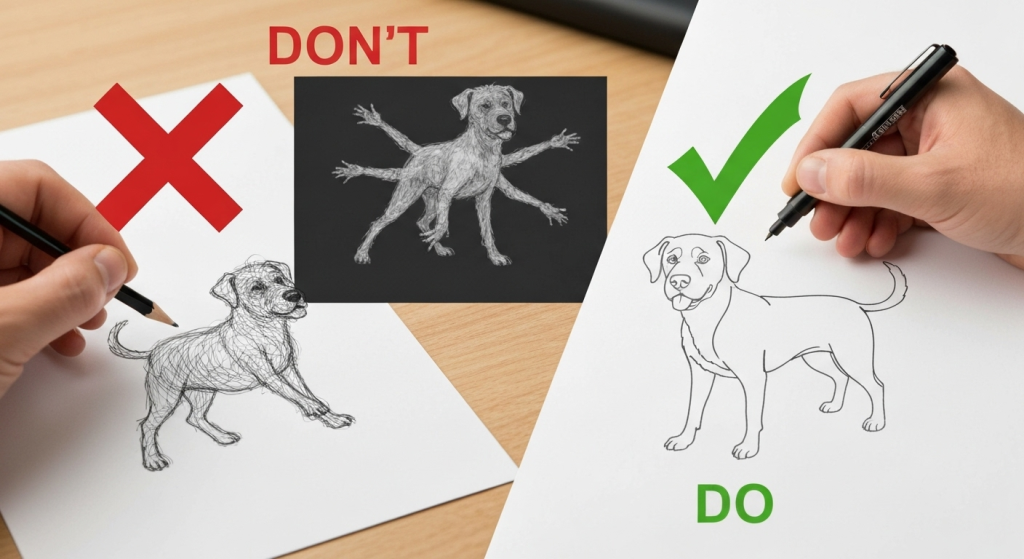

Tip 1: Clean Up Your Lines

Messy, “hairy” sketches are confusing for the AI. It can’t tell which line is the “real” one. The best results come from a clean, single-line drawing, almost like a coloring book page. Before you upload, erase any smudges or extra sketchy lines. A clear black line on a plain white background is perfect.
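To show what “clean” means in practice, here is a minimal sketch of thresholding, the simplest way to push a phone photo toward pure black lines on pure white. It works on a plain grid of grayscale values (0 is black, 255 is white) so it runs without any image library; in real use you would load the pixels with an image library first. The function name and the cutoff of 128 are assumptions, and you may need to adjust the cutoff for your lighting.

```python
def clean_line_art(gray_rows, threshold=128):
    """Snap every pixel to pure black (0) or pure white (255)."""
    return [[0 if px < threshold else 255 for px in row] for row in gray_rows]


# A tiny 3x4 "photo": off-white paper, dark pen ink, and one faint smudge.
photo = [
    [250, 245, 240, 252],
    [248,  30, 200, 251],  # 30 is ink, 200 is a light smudge
    [249,  25,  40, 250],
]

cleaned = clean_line_art(photo)
for row in cleaned:
    print(row)
```

The ink pixels become solid black while the paper and the light smudge both become white. Heavy, dark smudges will survive a threshold like this, so those still need erasing by hand.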

Tip 2: Use a “Control” Tool if You Can

If you are serious about bringing a specific sketch to life, you have to use a tool that gives you precision. As my experiment showed, Stable Diffusion with ControlNet is the gold standard for this. Of the three tools I tested, it was the only one that reliably kept my composition locked in place. It takes time to learn, but the results are worth it if you need that level of control.

Tip 3: Be Specific With Your Text Prompt

Don’t just upload the sketch and hope for the best. You must tell the AI what it is looking at. Don’t just say “a cat.” Say “A line art sketch of a cat, digital art.” This helps the AI categorize the image and understand what to do with it. If your tool allows it (like Midjourney or Stable Diffusion), increase the “weight” or “strength” of your sketch to tell the AI to pay more attention to it.

Tip 4: Iterate, Iterate, Iterate

My experience has taught me one big lesson: the first generation is almost never the final one. See the first result as a starting point.

- Is the style wrong? Tweak the prompt (e.g., from “digital art” to “oil painting”).

- Is it ignoring your sketch? Increase the sketch’s “strength” or “weight.”

- Is it too slavish to your sketch? Decrease the strength to give the AI more creative freedom.

This process of tweaking and re-running is where the real art happens.

Pros and Cons: Sketch-to-Art Generation

Based on this experiment, here are the simple pros and cons of this technology as it stands today.

| Pros | Cons |
| --- | --- |
| Speed: You can visualize a complex, rendered idea in seconds from a simple doodle. | Frustration: The AI can stubbornly ignore your composition, which feels like it’s “not listening.” |
| Empowerment: Great for people who can draw but struggle with digital painting, coloring, or shading. | Generic Style: If you’re not careful, the AI’s default style can overshadow your own personal style. |
| Brainstorming: You can get 10 different stylistic variations of your sketch in a minute, breaking “artist’s block.” | Learning Curve: The best, most precise tools (like ControlNet) are the hardest to learn and set up. |

Frequently Asked Questions (FAQs)

What’s the best free AI for turning sketches into art?

Many free web-based Stable Diffusion tools include a “Scribble-to-Image” or ControlNet feature. They are a great place to start. DALL-E 3 is also available for free through Microsoft’s Bing Image Creator, which is an excellent, easy-to-use option.

Can the AI copy my art style from a sketch?

Not really from a simple line sketch. The AI will apply its own style (or the one you ask for in the prompt) to your composition. To copy your specific style, you would need to fine-tune a model on many examples of your finished artwork, which is a much more advanced process.

Why does the AI add weird things that weren’t in my drawing?

This is often the AI “hallucinating.” It might misinterpret a sketchy line as an object (like a smudge becoming a tree) or it might add things that are commonly associated with your prompt (like adding stars to a “night sky” prompt even if you didn’t draw them).

Do I need a drawing tablet to make AI sketches?

Absolutely not! As my experiment showed, a simple line drawing works best. You can get great results by just drawing something with a black pen on white paper and taking a clear, well-lit photo of it with your phone.

Final Thoughts: The Artist and The Tool

So, which tool actually understood my drawing?

The answer is complicated. Midjourney didn’t understand it, but it gave me a beautiful, inspirational alternative. DALL-E 3 understood the idea and acted like a helpful creative partner. Stable Diffusion with ControlNet was the only one that understood my exact lines and followed them like a skilled apprentice.

What I learned is that there isn’t one “best” tool. The best tool depends entirely on your goal. My experiment confirmed that this technology isn’t a simple “magic” button. It’s a set of powerful, specialized instruments. My experience over the years has shown that the real skill is learning which instrument to pick for the job at hand. Do you need an inspirer, a partner, or a technician?