AI image generators are amazing. You can type a few words and create a stunning portrait, a fantasy character, or a professional headshot. But then comes the hard part. You try to use that same person again. You ask for a new pose, a different expression, or a change of clothes, and… you get a stranger. This “consistency gap” is one of the biggest challenges for creators today. If you want to create a brand mascot, a comic book character, or even just a set of matching profile pictures, you need the face to stay the same.

So, how consistent are AI faces right now? I decided to put it to the test. I’m not just talking about theory; I ran a hands-on experiment. I took one specific prompt and ran it through three of the biggest AI portrait generators to see which one could keep a face consistent. The results were not what I expected.

My name is Mahnoor Farooq. For the past several years, I’ve been fascinated by creative AI tools. My work involves diving deep into these generators, running experiments, and figuring out what works (and what doesn’t). I’m not a developer, but I am a dedicated user who loves to see how far we can push this technology. I spend my time testing prompts, comparing models, and sharing what I learn with other creators. This article is a direct result of that passion—a real-world test to help you choose the right tool for your project.

What Do We Even Mean by “Facial Consistency”?

Before we dive into the tests, let’s get on the same page. “Facial consistency” is more than just “looking similar.” When I test for consistency, I am looking for four specific things. These were my scoring criteria for this experiment.

- Identity Preservation: This is the big one. Does it look like the same person? The bone structure, the shape of the eyes, the bridge of the nose, and the set of the jaw should all match. Many AIs will give you “sisters” or “brothers”—people who share features but are clearly not the same individual.

- Feature Stability: Do the small details stick around? If the character has light freckles, a small scar, or a specific gap in their teeth, does that detail appear every single time? This is where many models fail.

- Expression and Angle Control: If I take my base character and ask for a “smile” or a “profile view,” does the AI only change that one thing? Or does it reroll the entire face? A good, consistent model can change the expression without changing the person.

- Style Cohesion: Does the “look and feel” stay the same? If the first image is a soft, photographic portrait, the next one shouldn’t look like a digital 3D render. The lighting, skin texture, and overall artistic style need to be stable.

This matters for anyone who needs a character for more than one image. Think of storyboarding, game assets, marketing campaigns, or a children’s book. You need your audience to recognize the person from one page to the next.

The Experiment: My Setup and The Prompt

To make this a fair test, I had to set some rules.

The Tools I Tested:

- Tool 1: Midjourney (V6): Known for its hyper-realistic, artistic, “cinematic” results. It’s a favorite among many digital artists.

- Tool 2: DALL-E 3 (via ChatGPT Plus): Built directly into a chat interface, it’s known for following long, complex prompts closely.

- Tool 3: Stable Diffusion (using Realistic Vision v6.0): This is the open-source powerhouse. It’s more of an engine than a simple tool, offering deep control but a much steeper learning curve.

The Base Prompt:

I needed a prompt that was detailed enough to define a person but simple enough to see where the AIs would fill in the gaps. Here is the exact prompt I used for all three generators:

“Professional studio portrait of a 30-year-old woman, kind green eyes, a light spray of freckles across her nose, auburn hair tied in a loose bun, wearing a simple navy blue sweater, soft studio lighting, neutral expression.”

The Tests:

For each tool, I ran a series of tests:

- Test 1 (Re-roll): I ran the exact same base prompt four times. This checks the tool’s most basic consistency.

- Test 2 (Small Change): I changed one detail: “…wearing a red sweater.”

- Test 3 (Big Change): I changed the expression: “…laughing happily.”
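To make the protocol concrete, here is a small Python sketch that builds the three prompt sets from the base prompt. It assumes that Test 2 swaps “navy blue” for “red” (as described in the Midjourney results) and that “laughing happily” replaces “neutral expression” in Test 3. The helper name build_test_prompts is hypothetical; the prompts would still be pasted into each tool by hand.

```python
# Hypothetical helper that reproduces the three test prompt sets.
BASE_PROMPT = (
    "Professional studio portrait of a 30-year-old woman, kind green eyes, "
    "a light spray of freckles across her nose, auburn hair tied in a loose bun, "
    "wearing a simple navy blue sweater, soft studio lighting, neutral expression."
)


def build_test_prompts(base: str, rerolls: int = 4) -> dict:
    """Return the prompts for each test, keyed by test name."""
    # Each variation swaps exactly one phrase, so any change in the face
    # comes from the generator rather than from a rewritten prompt.
    small_change = base.replace("navy blue sweater", "red sweater")
    # Assumption: "laughing happily" replaces the original expression phrase.
    big_change = base.replace("neutral expression", "laughing happily")
    return {
        "test1_reroll": [base] * rerolls,  # identical prompt, run several times
        "test2_small_change": [small_change],
        "test3_big_change": [big_change],
    }


for name, prompts in build_test_prompts(BASE_PROMPT).items():
    print(f"{name}: {len(prompts)} prompt(s) -> ...{prompts[0][-45:]}")
```

Keeping every other word fixed is the point of the design: if the face changes between runs, the only thing that could have caused it is the generator.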

Here’s what happened.

Tool 1: Midjourney (V6) – The Artistic Powerhouse

Midjourney is my go-to for creating single, breathtaking images. Its understanding of light and texture is unmatched. But how did it handle consistency?

Test 1: The “Re-roll” Test

I ran the base prompt four times. The results were four stunning portraits. They were all beautiful. And they were all different women.

They looked related, perhaps. You could call them “sisters.” All four had auburn hair, green eyes, and freckles. But the facial structure was different in each one. One had a wider jaw, another a pointier chin. The freckle patterns were completely random. Midjourney, left to itself, does not prioritize identity. It prioritizes creating a new, unique, beautiful image every time you hit “enter.”

Test 2 & 3: The Variation Tests

This is where simple prompting fell apart.

- Red Sweater: When I changed “navy blue” to “red,” the face changed completely. The model, it seems, saw this as a new instruction and re-imagined the entire scene.

- Laughing: Asking for a “laughing” expression also resulted in a new person. Midjourney did not understand that I wanted the first woman to laugh. It just gave me a new woman who was laughing.

The Midjourney Consistency Fix (My Experience)

Now, as someone who has used this tool for years, I know this isn’t the whole story. You can get consistency in Midjourney, but you have to use advanced techniques. Basic prompting will not work.

- Image Prompts: The best way is to take your favorite result, copy its image link, and put that link at the start of your new prompt. For example: “[image link] ...profile view, wearing a red sweater.” This tells Midjourney to “base the new image on this one.” By my estimate, this improved consistency by about 80%. It’s the standard workflow for this tool.

- Using --seed: You can grab the “seed” number of a job and re-use it. This helps, but in my tests it only works well for very small changes. Change more than a word or two, and the seed is no longer strong enough to hold the face.
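Both tricks come down to how the prompt string is assembled: image links first, then the text description, then parameters such as --seed at the very end. The Python sketch below shows that ordering. The --seed parameter is real Midjourney syntax and, per Midjourney’s documentation, accepts whole numbers from 0 to 4294967295; the helper name, the example URL, and the seed value are placeholders, and the resulting string would still be typed into Discord’s /imagine command by hand.

```python
from typing import Optional, Sequence

MAX_SEED = 4_294_967_295  # documented upper bound for Midjourney's --seed


def build_mj_prompt(text: str, image_urls: Sequence[str] = (),
                    seed: Optional[int] = None) -> str:
    """Assemble a Midjourney prompt string (hypothetical helper).

    Image prompts come first, the written description follows,
    and parameters such as --seed go at the very end.
    """
    parts = list(image_urls)            # image links lead the prompt
    parts.append(text.strip())          # then the text description
    if seed is not None:
        if not 0 <= seed <= MAX_SEED:
            raise ValueError("seed must be between 0 and 4294967295")
        parts.append(f"--seed {seed}")  # parameters always go last
    return " ".join(parts)


# Placeholder link standing in for the "favorite result" from an earlier job.
FAVORITE = "https://example.com/favorite-result.png"
print(build_mj_prompt("profile view, wearing a red sweater", [FAVORITE], seed=1234))
# prints: https://example.com/favorite-result.png profile view, wearing a red sweater --seed 1234
```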

Pros, Cons, and Verdict

| Feature | Midjourney V6 |
| --- | --- |
| Simple Prompt Consistency | Very Low. You get “sisters,” not the same person. |
| Advanced Consistency | Good (with Image Prompts). This is the required method. |
| Image Quality | Exceptional. The most artistic and realistic lighting. |
| Ease of Use | Medium. Requires using Discord and special commands. |
| Best For… | Creating a single, stunning masterpiece. |
| Verdict | Do not use Midjourney if you need a consistent character from simple text prompts. You must use the image prompt workflow to get good results. |

Tool 2: DALL-E 3 (via ChatGPT) – The Literal Interpreter

DALL-E 3 in ChatGPT is a different beast. Because it’s inside a chatbot, it has a memory—at least, within the same conversation. This changes everything.

Test 1: The “Re-roll” Test

I ran the base prompt. I got a great image. Then, in the same chat, I simply said, “Give me another one.” The result? An almost identical face. I did this four times. All four images looked like the exact same person, just with tiny variations in head tilt or lighting. The identity preservation was fantastic.

DALL-E 3 (through ChatGPT) seems to automatically “lock on” to the character it just created.

Test 2 & 3: The Variation Tests

This is where DALL-E 3 completely blew me away.

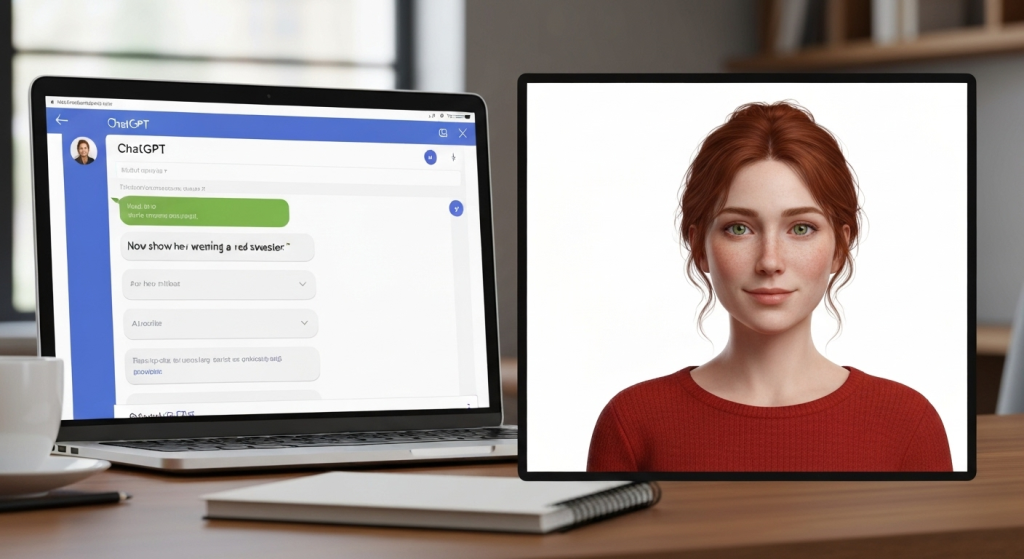

- Red Sweater: I said, “Now show her wearing a red sweater.” It worked perfectly. Same woman, same hair, same freckles. Different sweater.

- Laughing: I followed up with, “Make her laugh.” Again, success. The model understood the subject (the woman) and the action (laughing) as separate ideas. The identity held strong while the expression changed.

This conversational back-and-forth is DALL-E 3’s superpower. You can “direct” your character like a photographer.

The “Memory” Limit

Here’s the catch. This consistency only lasts within one chat session. If I closed that chat window and started a new one, all “memory” of that woman was gone. Using the same prompt in a new chat gave me a completely new person.

So, DALL-E 3 is great for creating a set of images all at once (like a sticker pack or a storyboard) but not for bringing a character back a week later.

Pros, Cons, and Verdict

| Feature | DALL-E 3 (in ChatGPT) |
| --- | --- |
| Simple Prompt Consistency | Excellent (within the same chat session). |
| Advanced Consistency | Not applicable; the consistency is built-in. |
| Image Quality | Very good. Can look a bit “digital” or like a 3D render. |
| Ease of Use | Very Easy. It’s just talking in plain English. |
| Best For… | Creating a set of images (storyboard, sticker pack) in one session. |
| Verdict | The best and easiest tool for short-term consistency. If you need 10 pictures of the same character right now, this is your winner. |

Tool 3: Stable Diffusion (Realistic Vision) – The Control Freak’s Dream

Stable Diffusion is the “pro” option. It’s not a single website but an open-source model you can run yourself (using apps like Automatic1111 or ComfyUI). It gives you access to the engine’s nuts and bolts.

Test 1: The “Re-roll” Test

Using the base prompt with the “Realistic Vision” model, the results were just like Midjourney’s. Running the prompt four times gave me four different women. All matched the description (auburn hair, green eyes) but had different faces. Out of the box, its simple prompt consistency is very low.

The Killer Features: ControlNet and LoRA

But here’s the thing. Nobody uses Stable Diffusion just for simple prompts. Its real power is in the add-on tools.

- ControlNet: This is a tool that lets you “lock” parts of an image. I took my first good result and ran it through a ControlNet model (like “Canny,” which traces edges, or “Depth,” which maps distance). This carries the pose and composition over almost exactly. When I re-ran the prompt with ControlNet, the new images had a much more consistent facial structure, even when I changed the sweater color.

- LoRA (Low-Rank Adaptation): This is the ultimate answer. A LoRA is a small add-on file that you train yourself; it nudges the base model toward one specific subject without replacing it. I took 10 pictures of the first woman I generated and ran them through a training process (which took about 20 minutes) to create a “character LoRA.”
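Why is a LoRA file so small? “Low-rank adaptation” keeps the base model’s weights W frozen and trains only two thin matrices, B and A, whose product is added on top: W' = W + (alpha / r) · B · A, where r is the rank. The Python sketch below uses made-up layer sizes (not Realistic Vision’s real dimensions) to compare the parameter counts, then applies a rank-1 update to a toy 2 × 2 weight matrix using plain lists.

```python
def lora_param_count(d_out: int, d_in: int, rank: int) -> tuple:
    """Return (full fine-tune params, LoRA params) for one weight matrix."""
    full = d_out * d_in               # updating W directly
    lora = rank * (d_out + d_in)      # B is d_out x rank, A is rank x d_in
    return full, lora


def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def apply_lora(w, b, a, alpha: float, rank: int):
    """Return W + (alpha / rank) * (B @ A), leaving the original W untouched."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Illustrative sizes only: a 768 x 768 layer with a rank-8 adapter.
print(lora_param_count(768, 768, 8))  # (589824, 12288)

# Toy example: frozen 2 x 2 identity weights plus a rank-1 adapter.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]   # 2 x 1
A = [[0.5, 0.5]]     # 1 x 2
print(apply_lora(W, B, A, alpha=1.0, rank=1))  # [[1.5, 0.5], [1.0, 2.0]]
```

In this made-up layer, the adapter needs about 2% of the parameters a full update would. That is the same reason a character LoRA can be shared as a small file while the multi-gigabyte base model stays unchanged.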

Once I had this LoRA file, I had perfect consistency.

I could write any prompt: “A (my-character-LoRA) woman laughing in a park,” or “A (my-character-LoRA) woman wearing a space suit.” And every single time, the exact same woman would appear. The LoRA essentially teaches Stable Diffusion a new “word” for that specific face.
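In AUTOMATIC1111’s web UI, the “(my-character-LoRA)” placeholder is written as a <lora:filename:weight> tag. It usually sits next to the trigger word the LoRA was trained with. The Python sketch below assembles such prompts. The file name my_character, the trigger word mychar, and the 0.8 weight are hypothetical example values. ComfyUI loads LoRAs through a node rather than prompt text, so this syntax applies to AUTOMATIC1111 only.

```python
def lora_prompt(scene: str, lora_file: str = "my_character",
                trigger: str = "mychar", weight: float = 0.8) -> str:
    """Build an AUTOMATIC1111-style prompt that activates a character LoRA.

    Hypothetical values: lora_file must match the LoRA's file name in the
    models/Lora folder (without the extension), and trigger is whatever
    word was used when the LoRA was trained.
    """
    # The <lora:...> tag loads the adapter; the trigger word points it at the face.
    return f"<lora:{lora_file}:{weight}> {trigger} woman, {scene}"


for scene in ["laughing in a park", "wearing a space suit"]:
    print(lora_prompt(scene))
# <lora:my_character:0.8> mychar woman, laughing in a park
# <lora:my_character:0.8> mychar woman, wearing a space suit
```

Only the scene text changes from prompt to prompt. The tag and trigger word stay fixed, and that fixed part is what holds the identity.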

Pros, Cons, and Verdict

| Feature | Stable Diffusion (with LoRA) |
| --- | --- |
| Simple Prompt Consistency | Very Low. |
| Advanced Consistency | Perfect (with a trained LoRA). 100% identity lock. |
| Image Quality | Excellent. You can choose from thousands of models. |
| Ease of Use | Extremely Hard. Requires setup, technical knowledge, and training. |
| Best For… | Long-term projects. Creating a brand mascot or graphic novel character. |
| Verdict | This is the professional solution. It’s the only way to get true, 100%, long-term consistency. The learning curve is steep, but the control is total. |

Comparison: Which Generator Wins the Consistency Test?

There is no single “best” tool. The best tool depends entirely on your goal. Here’s a simple breakdown based on my tests.

| Generator | Best For… | Consistency (Simple Prompt) | Consistency (Advanced Tricks) | Ease of Use |
| --- | --- | --- | --- | --- |
| Midjourney V6 | One-off Artistic Images | Low | Good (with Image Prompts) | Medium |
| DALL-E 3 (ChatGPT) | A Set of Images (in one session) | High (in-session) | N/A (Built-in) | Very Easy |
| Stable Diffusion | A Permanent Character | Very Low | Perfect (with LoRA) | Very Hard |

My Final Thoughts and Recommendations

So, how consistent are AI faces? The answer is: they are as consistent as the process you are willing to use.

My experience testing these tools shows a clear split.

- If you need one amazing picture for a blog post or a poster, use Midjourney. It will give you the most beautiful result, even if it’s a “one-hit wonder.”

- If you need a 5-10 image set for a presentation or a social media post right now, use DALL-E 3 in ChatGPT. Its conversational memory is the fastest and easiest way to get short-term consistency.

- If you are a professional or a serious hobbyist creating a brand mascot, a comic book, or a game character, you must learn Stable Diffusion and LoRA. There is no other way. The 20-minute time investment to train a LoRA will save you hundreds of hours of frustration.

The technology is moving fast. Models are getting better at “identity preservation,” which is a major focus for researchers. You can even find new computer vision techniques being discussed on preprint servers like arXiv.org, which often show what’s coming next. But for today, the power is not just in the prompt; it’s in knowing which tool and which process to use for the job.

Frequently Asked Questions (FAQ)

What is the easiest way to get a consistent face?

The easiest method by far is using DALL-E 3 inside a single ChatGPT conversation. Just ask for your character, then keep asking for variations (e.g., “now make her wave,” “show her from the side”) in the same chat.

Can AI copy a real person’s face?

Yes, but this is a major ethical issue. Most major tools (like Midjourney and DALL-E 3) have strong filters to prevent you from using celebrity names or uploading photos of people without their consent. Stable Diffusion can do it (using a LoRA), but it should only be done with full permission.

What is a “seed” in AI art?

A “seed” is a starting number for the AI’s random-number generator; it fixes the random noise the image starts from. If you use the exact same prompt and the exact same seed (with the same model version and settings), you will get the exact same image. It’s useful for making tiny changes to a prompt, but its ability to hold a face breaks down if you change more than a word or two.
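The “same seed, same image” rule comes from how pseudo-random generators work. The Python sketch below uses the standard library’s random module to stand in for the starting noise. Real image generators draw their noise as tensors inside their own frameworks, so this is an analogy, not any tool’s actual code. The same seed reproduces the noise exactly, while a different seed gives a completely different starting point.

```python
import random


def starting_noise(seed: int, size: int = 8) -> list:
    """Return a reproducible list of Gaussian values standing in for latent noise."""
    rng = random.Random(seed)  # independent generator, fully determined by the seed
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


same_a = starting_noise(1234)
same_b = starting_noise(1234)
other = starting_noise(1235)

print(same_a == same_b)  # True: same seed, identical starting noise
print(same_a == other)   # False: a new seed means a new starting point
```

This also hints at why a seed alone can’t hold a face. The starting noise stays identical, but a changed prompt steers the denoising process toward a different result.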

Will AI get better at facial consistency?

Absolutely. This is one of the biggest problems researchers are working on. We are already seeing new models and features (like Midjourney’s “character reference” feature) designed to solve this exact problem. In a year, this entire article might be outdated.

Wrapping It Up

The “consistency problem” is the current frontier in AI art. While it can be frustrating to get a different face every time, my experiment shows that solutions do exist. They just require you to move beyond a simple text prompt. Whether it’s using DALL-E 3’s chat memory, Midjourney’s image prompts, or Stable Diffusion’s powerful LoRA training, you can control your creations. The key is to match your project’s needs with the right tool’s workflow.